You launch the Spark session and write ACID, managed tables to Apache Hive.

As an Apache Spark developer, you learn the code constructs for executing Apache Hive queries using the HiveWarehouseSession API. You also need to configure file-level permissions on tables for users.

A step-by-step procedure walks you through choosing one mode or another, starting the Apache Spark session, and executing a read of Apache Hive ACID, managed tables.

A step-by-step procedure walks you through connecting to HiveServer (HS2) to write tables from Spark, which is recommended for production.

DOWNLOAD SPARK LLAP JAR HOW TO

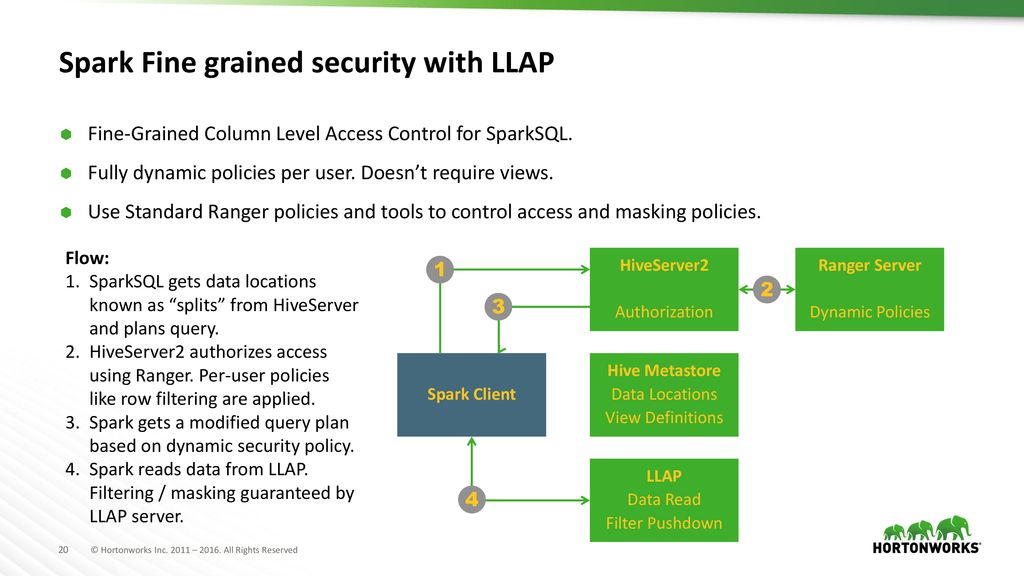

Configuring external file authorization

As Administrator, you need to know how to configure properties in Cloudera Manager for read and write authorization to Apache Hive external tables from Apache Spark. You learn how to configure, and which parameters to set for, a Kerberos-secure HWC connection for querying the Hive metastore from Spark.

In two steps, you configure Apache Spark to connect to HiveServer (HS2). An example shows how to configure this mode while launching the Spark shell.

In a two-step procedure, you see how to configure Apache Spark to connect to the Apache Hive metastore. An example shows how to configure Spark Direct Reader mode while launching the Spark shell.

You need to know the prerequisites for using Auto Translate to select an execution mode transparently, based on your query. In a single step, you configure Auto Translate and submit an application.

You read about which configuration provides fine-grained access control, such as column masking. You can see, graphically, how the configuration affects the query authorization process and your security.

Using Hive Warehouse Connector with Oozie Spark Action

A comparison of each execution mode helps you make HWC configuration choices.

You do not need HWC to read or write Hive external tables. You might want to use HWC to purge external table files. From Spark, using HWC you can write Hive external tables in ORC or Parquet formats.

Creating an external table stores only the metadata in HMS. If you use HWC to create the external table, HMS keeps track of the location of table names and columns. Dropping an external table deletes the metadata from HMS. You can set an option to also drop the actual data in files, or not, from the file system. If you do not use HWC, dropping an external table deletes only the metadata from HMS. If you do not have permission to access the file system, and you want to purge table data in addition to the metadata, use HWC.

An example of loading a Hive ACID table through the HiveAcid data source:

    scala> val df = spark.read.format("HiveAcid").options(Map("table" -> "default.acidtbl")).load()

Limitations:

- You cannot write data using Spark Direct Reader.
- Transaction semantics of Spark RDDs are not ensured when using Spark Direct Reader.
- HWC supports reading tables in any format, but currently supports writing tables in ORC.
- The Spark Thrift server is not supported.
- Table stats (basic stats and column stats) are not generated when you write a DataFrame.
- The Hive Union types are not supported.
- When the HWC API save mode is overwrite, writes are limited. If your query accesses only one table and you try to overwrite that table using an HWC API write method, a deadlock state might occur.

The following sequence reads from `t1` and then overwrites the same table, which is the case to avoid:

    scala> val df = hive.executeQuery("select * from t1")
    scala> df.write.format("com.hortonworks.spark.sql.hive.llap.HiveWarehouseConnector").mode("overwrite").option("table", "t1").save()

Workaround for using the Hive Warehouse Connector with Oozie Spark action

Hive and Spark use different Thrift versions that are incompatible with each other. Changing Thrift in Hive is complicated and may not be resolved in the near future, so packages are shaded inside the HWC JAR to make Hive Warehouse Connector work with Spark. See the workaround in Cloudera Oozie documentation (link below).

Blog: Enabling high-speed Spark direct reader for Apache Hive ACID tables.
You use HWC when you want to access Hive managed tables from Spark. You call the HiveWarehouseConnector API to write to managed tables. HWC implicitly reads tables when you run a Spark SQL query on a Hive managed table.
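The read and write paths described above can be sketched in Scala as follows. This is a minimal sketch, not a definitive implementation: it assumes the HWC JAR is on the classpath and that the session is already configured to reach HiveServer (HS2). The application name `hwc-sketch` and the target table `t2` are hypothetical. The data source class name is the one commonly used with HWC and may differ in your distribution.

```scala
import org.apache.spark.sql.SparkSession
import com.hortonworks.hwc.HiveWarehouseSession

object HwcSketch {
  def main(args: Array[String]): Unit = {
    // Assumption: HWC connection properties are supplied at submit time.
    val spark = SparkSession.builder().appName("hwc-sketch").getOrCreate()

    // Build the HiveWarehouseSession on top of the existing Spark session.
    val hive = HiveWarehouseSession.session(spark).build()

    // Read a managed table through HWC.
    val df = hive.executeQuery("select * from t1")

    // Append to a different table (t2 is hypothetical) so that the
    // read-and-overwrite-same-table limitation does not apply.
    df.write
      .format("com.hortonworks.spark.sql.hive.llap.HiveWarehouseConnector")
      .mode("append")
      .option("table", "t2")
      .save()

    spark.stop()
  }
}
```

The sketch writes to a table other than the one it reads, because overwriting the table that the query reads from can lead to a deadlock.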

DOWNLOAD SPARK LLAP JAR SOFTWARE

You need to understand Hive Warehouse Connector (HWC) to query Apache Hive tables from Apache Spark. HWC is software for securely accessing Hive tables from Spark. Examples of supported APIs, such as Spark SQL, show some operations you can perform, including how to write to a Hive ACID table or write a DataFrame from Spark.

I also tried to execute this using an Azure ADF pipeline (by uploading the respective JAR file into the blob store), and I am getting the same issue.

I have also tried to use exclude statements like below; however, it is not working.

Can anyone help me with the below issue regarding "Class path contains multiple SLF4J bindings"? We need to execute the Scala activity (JAR file) through an Azure ADF pipeline. I have used the below versions to create a new project using IntelliJ with SBT. I created a class file and submitted it to the cluster using the IDE (by clicking the link "Submit myspark application to ...").
